Tracing Claude Code sessions

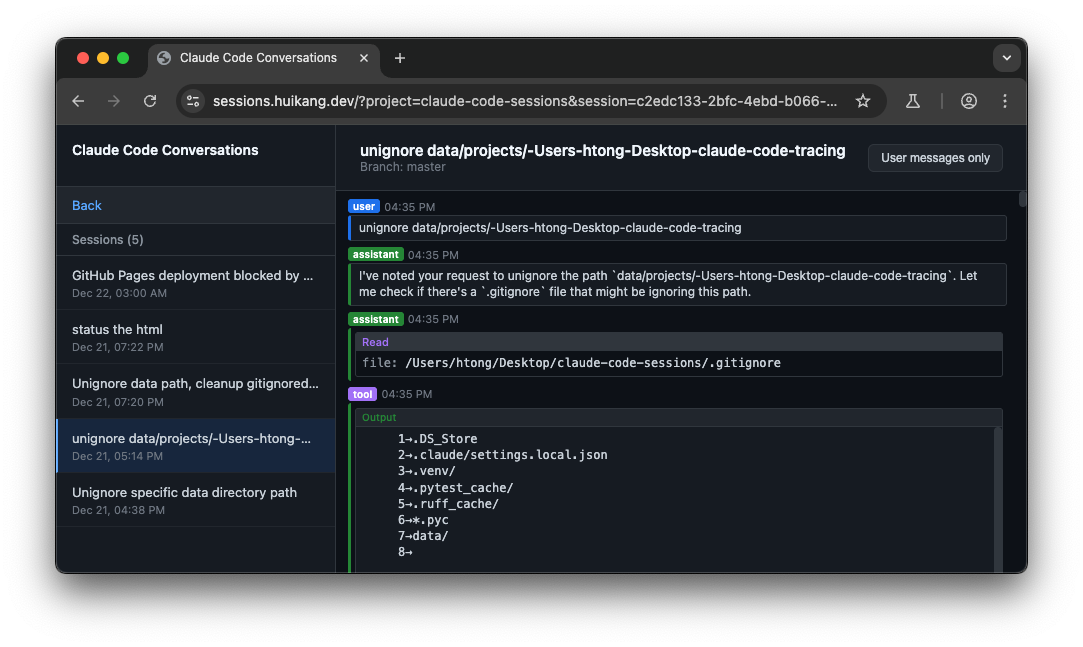

I have built yet another1 visualizer for Claude Code sessions - sessions.huikang.dev

This is a feature walkthrough

- Browse projects and sessions in a sidebar, view conversation transcripts with color-coded messages (user, assistant, tool)

- Claude Code may invoke subagents via the Task tool. You can see the subagent interactions displayed inline within the parent session

This was built23 with Claude Code itself. The code is here.

Why I built this

- Persist and visualize my own Claude Code sessions in one place

- Share how I prompt Claude Code (and my views on prompting)

- Deliver some views on how evaluation (and training) could be done

How I prompt Claude Code

I want to take the opportunity to share how I prompt Claude Code. As Claude Code improves, some of these prompting methods will perform better, and some of these prompts will no longer be necessary.

Immediately send in the first instruction

In this example, I ask Claude Code to whitelist traces belonging to projects that I want to publish. To do this, Claude Code will need to edit gitignore. Claude Code will also need to write and run a cleanup script to remove the files that are not gitignored so that what I see locally is what I see when I publish the project.

Instead of writing my instructions all at once, I break down my instructions and submit them separately.

My first instruction is just to edit gitignore to allow a certain project to be tracked. I let Claude Code understand the codebase and carry on with the task. Then I inject another instruction to write the cleanup script.

Breaking down instructions lets your agent start working sooner. The shorter your first instruction, the earlier the agent can start working. The second instruction that you write can depend on how the agent is performing.

For this, both the interface and the model should be steerable and immediately helpful. Claude Code allows this, I can send messages to Claude Code while Claude Code is working on the problem. The messages will be queued and delivered when Claude Code finishes a tool use4. However, I still often see Claude Code dropping my instructions.

Invest in communication

If I find myself taking a lot of effort to tell Claude Code what I want, I should think of ways to make this simpler.

When the first prototype is done, I find it very difficult to describe issues to Claude Code. I had to give instructions to Claude Code to navigate to the second session and the message with a certain substring. Alternatively, I take screenshots and upload the screenshot to Claude Code, and Claude Code will need to reverse engineer the screenshot to figure out which message I am talking about.

I should not tolerate such bottlenecks in communication. Therefore, I asked that “navigating the page should change the query params”.

Now I no longer need to take screenshots, I just pass in the URLs. Claude Code could also use the same URLs to show that it has completed its work. The early investment to improve communication is worth it.

Ideally, when appropriate, Claude Code should take the initiative to make communication easier. I have plenty of experience generating html files so I know what the good practices are, but people who are new do not.

Ask for proof of work

Instead of me checking Claude Code’s work, I ask Claude Code to show the proof that it is working.

In this example, I asked Claude Code to display the agent trace within the session itself. The result should look something like http://localhost:44043/?project=claude-code-tracing&session=10d53ddc-6406-4bed-a32b-da56257311f1&msg=deefa37a-9f1b-4466-abcf-44786f2b8183 where I can visit and inspect the outcome.

The benefit of doing this is that you can get Claude Code to check its own work. If Claude Code could not find a proof of what you asked is working, Claude Code is expected to figure out what went wrong. This saves my time checking Claude Code’s work and asking Claude Code to fix the issues.

Claude Code should take the initiative to produce the working example without me asking.

Let Claude tell you what is the issue

Instead of describing the issue to Claude Code, I show Claude Code the place where I find there is an issue, and I ask Claude Code to describe the issue to me.

In this example, Claude Code rendered the agent messages inside a tool message in the main thread. However, the rendering is horrible, the text in the agent messages is hardly visible.

Instead of describing the issue to Claude Code, I asked Claude Code on “tell me what you see in (url)”. Claude Code opens up Puppeteer, goes to the URL, and describes the issue to me5. I then ask to fix one of the issues that it has surfaced.

This saves me time describing issues — Claude Code can look into the problem faster than I can explain it. If the issue that Claude Code accurately surfaces aligns with the issue you want to fix, you can be more confident that Claude Code understands your assignment. There might be other issues that you are not aware of, and this interaction provides an opportunity for you to ask to fix the other issues as well.

For this to work, Claude Code needs to have good product taste.

How evaluation could be done

Usually coding performance is represented as variants of SWE-bench. I describe how SWE-bench is different from real world coding scenarios.

These are some things you should observe with my coding sessions

- My instructions are messy. I can ask for one thing, but ask for something completely different right after. Claude Code needs to be able to track all my instructions and address them all.

- My instructions need to be interpreted. The words from me need to be corroborated with the context found in the code.

- The description of the environment is incomplete. The model may not be served all the information. It is up to the agent to discover, and also decide to spend tokens in discovering.

- The reward needs to be inferred. When the session ends, it might be that the task is complete. It might be that I have given up and copied the entire conversation to Codex.

Sandbox benchmarks like SWE-bench are different from real world coding scenarios

- Instructions are precise. There is usually only one task that is to be solved. There are instructions on how to reproduce the issue.

- Instructions are clear. There is only one way to interpret the instruction.

- The environment is reproducible.

- The reward is clear. Benchmarks have processes to calculate the performance of the model. If the model is tasked to fix an issue, there are held-out test cases that the model needs to pass. If the model passes the test cases, the model succeeded, otherwise not.

Evaluating the model in a sandboxed benchmark is straightforward, you just run a script. Of course, there is a lot of work in curating the tasks for SWE-bench, and good taste is needed for good tasks.

Evaluating the model against coding sessions is more involved. There is no ground truth here. There is no script to run to confirm whether the task is correctly done. You cannot reproduce the user’s environment because you do not have access to the environment6.

You will need to create your own standards on what is good and what is bad. The remainder of the coding sessions could provide clues, but you still need to have your own judgment.

This is how I expect evaluation to be done for one model.

- You have one reference prompt-completion pair from the session. The reference completion is one turn taken by the model. The reference prompt is all the tokens before the reference completion.

- You get the model to generate the new completion from the reference prompt.

- You grade whether the new completion is better than the reference completion. You have a process that calculates this (will be elaborated)

- The new completion can be graded to be better, equal or worse than the reference completion.

This is how you can grade whether a completion is better than the reference completion

- You look at the remainder of the session of the reference session for the mistakes that the reference completion is causing.

- You check whether the new completion is making the same mistakes.

- You also look at the remainder of the session of the reference session for the progress that the reference completion is making.

- You check whether the new completion is also advancing the task at a similar amount with the similar token budget.

- You produce an overall judgment.

Notice what I described here involves comparing a new completion with a reference completion. The reference completion has access to the remainder of the sessions, whereas this is not the case for the new completion. You access the remainder from the new completion because you cannot fully reproduce the environment that created the reference session. If you want to compare two new completions, you need to design the process slightly differently. One simple way to do this is to compare two new completions against the reference completion and decide among the two new completions which one is better, or is it a tie.

Once you can evaluate, you can train. You train the model to produce more of the better completion on the reference prompts.

Footnotes

-

I think LangSmith was the first major platform to build a feature to trace Claude Code. While I was building this over the last weekend, Braintrust followed up shortly after. Simon Willison made

uvx claude-code-transcriptson Christmas Day. ↩ -

I was using

@modelcontextprotocol/server-puppeteerto manipulate the browser. According to the npm site, the package is no longer supported. As I write this, I found out that I can use@playwright/mcpinstead. ↩ -

It is unlikely that I will include more sessions. The main objective of making this prototype is to write this blogpost, which has been achieved. I will only add sessions if I have something I want to explicitly share - for example the methods on prompting Claude Code or to reproduce a certain bug with Claude Code. It is also likely that I will move on to a different prototype. Claude Code may eventually build this natively, making this prototype obsolete. ↩

-

Even so, there are times where Claude Code could ignore your submitted messages. The harness should have provided a to-do list where it asks the model on whether all the instructions of the user have been addressed. ↩

-

In this case, Claude Code tells me a bunch of issues but misses the most obvious issue: the box highlighting is overpowering the text and making the text unreadable. This is not ideal, but has provided me a useful signal that Claude Code does not yet understand the assignment. ↩

-

This is similar to our experiences as well. You cannot go back in time, try something else, and see if things turn out better. You can only imagine what would have happened. You can only evaluate your actions with limited data, and you need learn from this limited data. ↩